Paper Review 4: Going Deeper with Convolutions (GoogLeNet)

Motivation

Overcome two drawbacks of big networks:

- Bigger network = more parameters, so more prone to overfitting

- Higher computational cost

Idea

Move from fully connected to sparsely connected architectures → make the model “sparser” as well as computationally cheaper

The next step is to find the optimal sparse structure (below)

Architecture

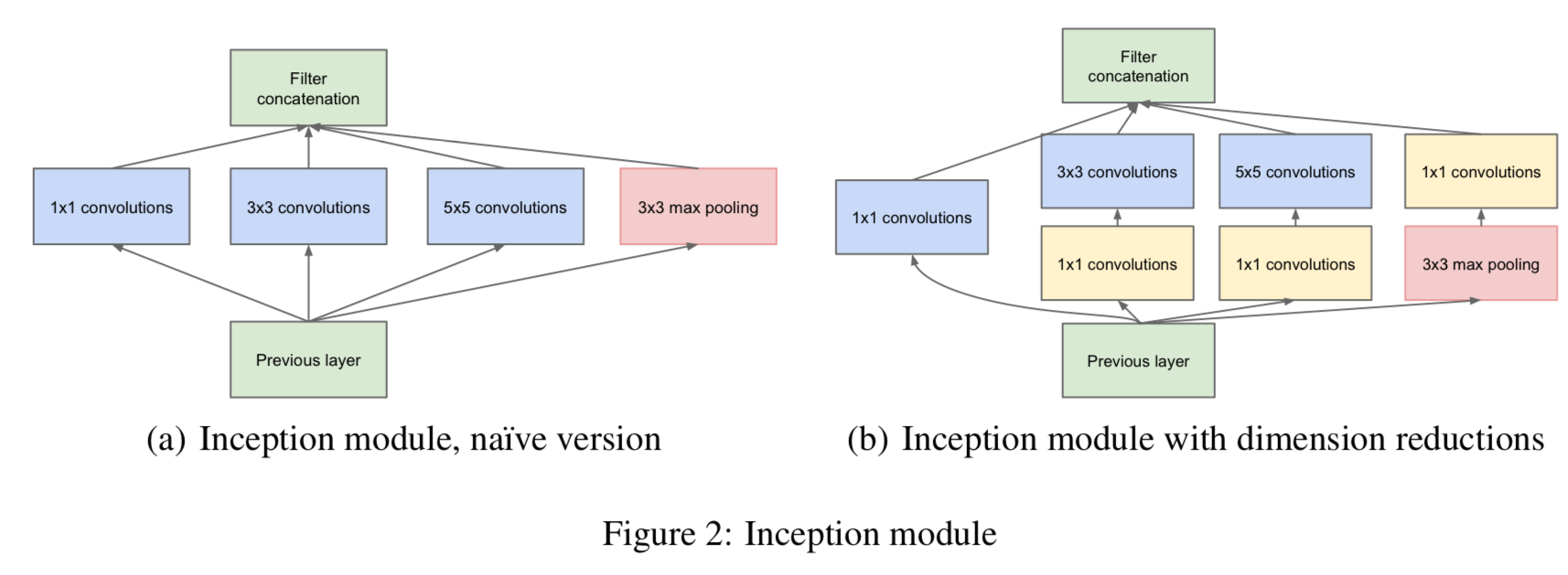

- Approximate the optimal sparse structure with readily available dense building blocks (a)

Reduce the computational cost (b)

- Apply dimension reduction and projection with 1x1 convolutions before the expensive 3x3 and 5x5 convolutions
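To make the saving from the 1x1 reduction concrete, here is a rough back-of-the-envelope sketch in plain Python. It counts multiply-accumulate operations for the 5x5 branch only, with and without a 1x1 reduction in front. The sizes (28x28 input with 192 channels, reduced to 16 channels, 32 output channels) are taken from the inception (3a) row of the paper's architecture table; the helper `conv_macs` and the variable names are made up for this illustration, and biases, stride, and padding effects are ignored.

```python
def conv_macs(height, width, c_in, c_out, kernel):
    """Multiply-accumulates of a stride-1 convolution that keeps the spatial size.

    Illustrative helper (not from the paper): every output position and
    output channel needs kernel * kernel * c_in multiply-accumulates.
    """
    return height * width * c_out * kernel * kernel * c_in


# Assumed sizes, following the inception (3a) configuration.
H = W = 28          # spatial size of the input feature map
C_IN = 192          # input channels
REDUCE_5X5 = 16     # channels after the 1x1 reduction
OUT_5X5 = 32        # output channels of the 5x5 convolution

# (a) naive version: 5x5 convolution applied directly to all 192 channels
naive_macs = conv_macs(H, W, C_IN, OUT_5X5, kernel=5)

# (b) reduced version: 1x1 convolution down to 16 channels, then the 5x5
reduced_macs = (
    conv_macs(H, W, C_IN, REDUCE_5X5, kernel=1)
    + conv_macs(H, W, REDUCE_5X5, OUT_5X5, kernel=5)
)

ratio = naive_macs / reduced_macs

print(f"naive 5x5 branch:   {naive_macs:,} MACs")
print(f"reduced 5x5 branch: {reduced_macs:,} MACs")
print(f"saving factor:      {ratio:.2f}x")
```

Under these assumptions the reduced branch needs roughly a tenth of the operations of the naive one, which is the point of version (b): the expensive large kernels only ever see a small number of channels.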