Summary

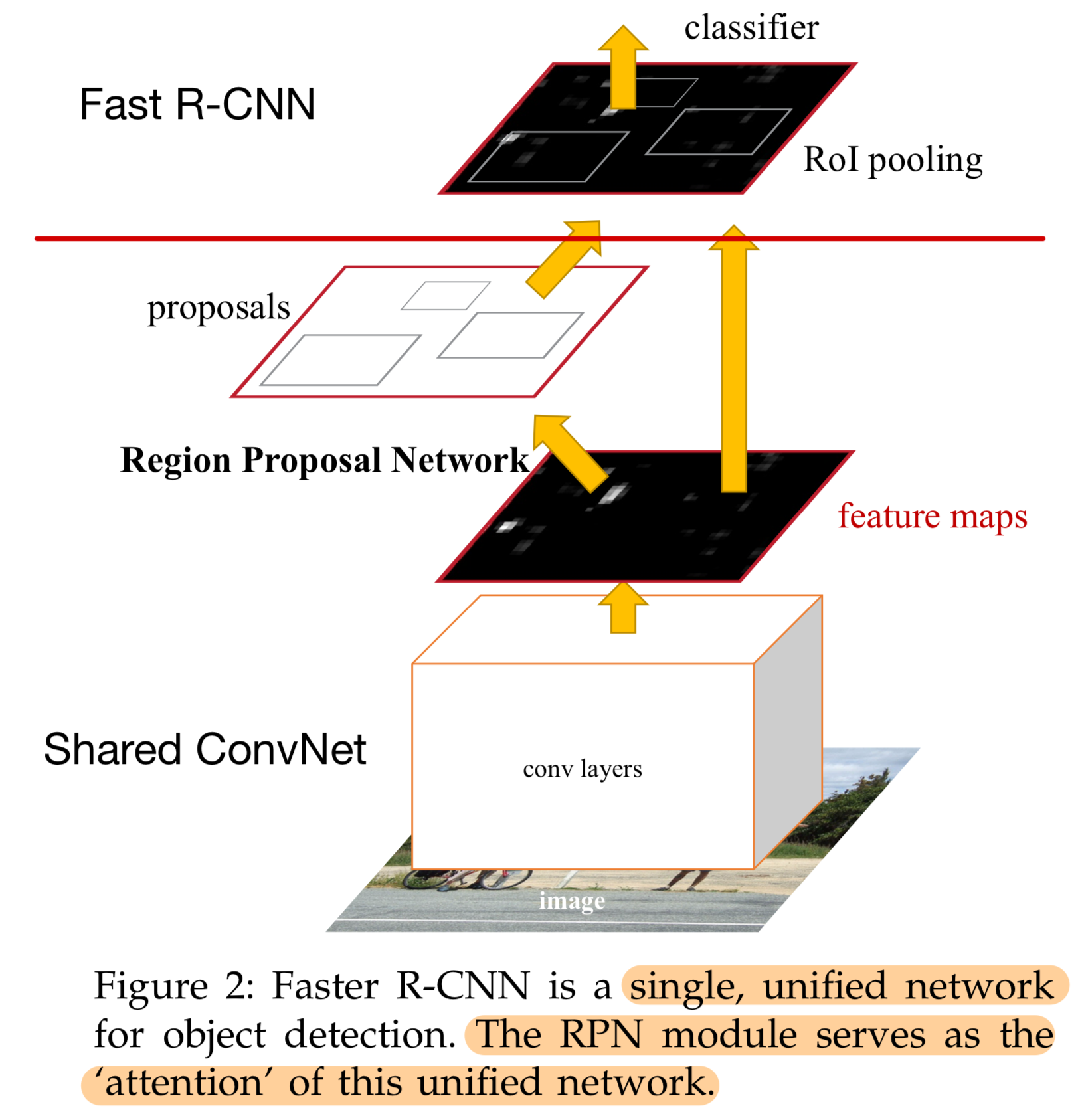

Introduces the Region Proposal Network (RPN), which shares its convolutional layers with the detection network. The RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position.

1. Motivation

Region proposals were the computational bottleneck of detection → compute them with a deep convolutional network instead

2. Architecture

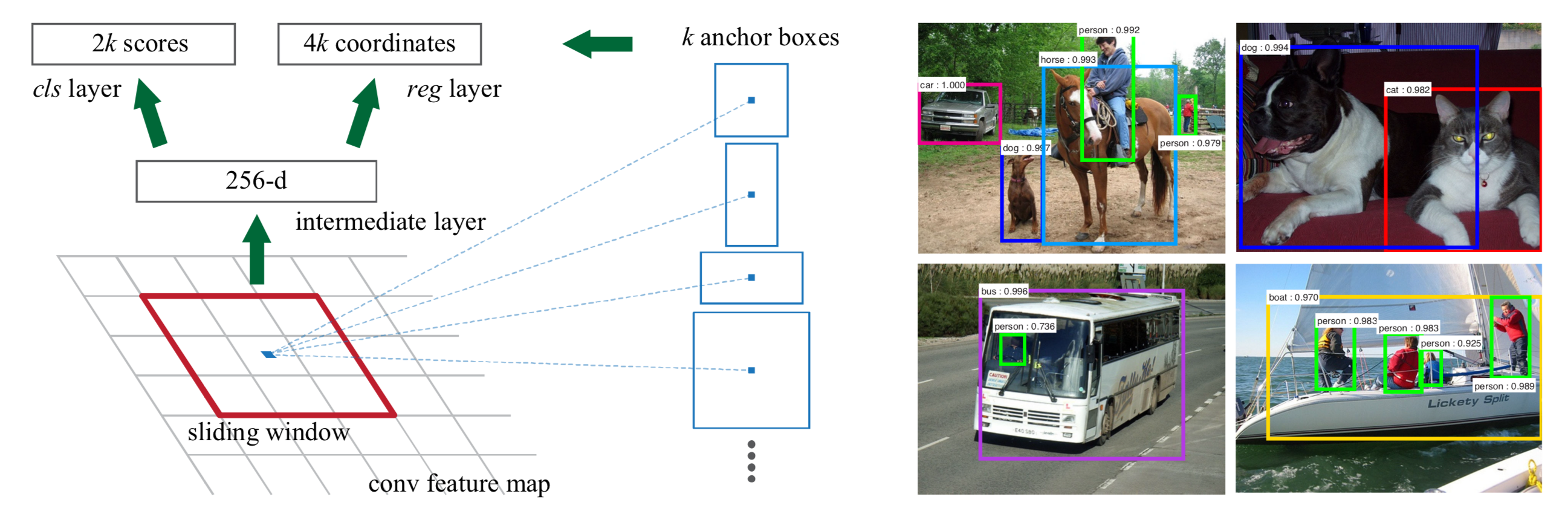

2-1. Region Proposal Network (RPN)

Input: image of any size

Output: a set of rectangular object proposals, each with an objectness score

Proposal

- Slide a small network over the conv feature map

- Each sliding-window position has k anchor boxes

- The features extracted at each position are fed into a box-regression (reg) layer and a box-classification (cls) layer
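
To make the sizes concrete, here is a small sketch of how many anchors and outputs one feature map produces. It assumes, as described in the paper, that the cls layer emits 2 scores per anchor and the reg layer 4 coordinates per anchor. The function name `rpn_output_sizes` and the 40×60 feature map are illustrative assumptions, not part of these notes.

```python
def rpn_output_sizes(feat_h, feat_w, k=9):
    """Count anchors and RPN outputs for a feat_h x feat_w conv feature map.

    Assumes 2 objectness scores and 4 box coordinates per anchor.
    """
    positions = feat_h * feat_w          # one sliding window per position
    return {
        "anchors": positions * k,        # k anchors per sliding window
        "cls_scores": positions * 2 * k, # object / not-object per anchor
        "reg_coords": positions * 4 * k, # (t_x, t_y, t_w, t_h) per anchor
    }


# Hypothetical 40 x 60 feature map with k = 9 anchors per position.
sizes = rpn_output_sizes(40, 60, k=9)
```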

Anchors

An anchor is centered at the sliding window and is associated with a scale and an aspect ratio

- Infer boxes with the sliding window → fit them to the k anchor boxes → k proposals
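
As a rough illustration of how the k anchors at one position could be generated, the sketch below builds one box per (scale, aspect ratio) pair. The defaults of 3 scales and 3 ratios (k = 9) follow the settings reported in the paper; the helper name `make_anchors`, the `(x1, y1, x2, y2)` box layout, and the example center are assumptions made for this example.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return len(scales) * len(ratios) anchors centered at (cx, cy).

    Each anchor is (x1, y1, x2, y2). `ratio` is height / width, and every
    anchor keeps an area of roughly scale ** 2.
    """
    anchors = []
    for scale in scales:
        for ratio in ratios:
            w = scale / math.sqrt(ratio)
            h = scale * math.sqrt(ratio)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


# Anchors for a sliding window centered at pixel (300, 200).
anchors = make_anchors(cx=300.0, cy=200.0)
```

All anchors share the same center and differ only in size and shape, which is what lets a single sliding-window position propose boxes at several scales.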

Loss Function

- Label

Anchors that are neither positive nor negative don't contribute to the training objective

- Positive label

- The anchor(s) with the highest IoU with a ground-truth box

- Any anchor with an IoU overlap higher than 0.7 with some ground-truth box

- Negative label

- Anchor with IoU < 0.3 for all ground-truth boxes
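
The labeling rules above can be sketched in a few lines. This is a minimal, unoptimized version: the function names `iou` and `label_anchors`, the `(x1, y1, x2, y2)` box layout, and the use of `-1` for ignored anchors are assumptions for this example, not something the notes prescribe.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Return one label per anchor: 1 positive, 0 negative, -1 ignored."""
    ious = [[iou(a, g) for g in gt_boxes] for a in anchors]
    labels = [-1] * len(anchors)
    for i, row in enumerate(ious):
        best = max(row, default=0.0)
        if best < neg_thresh:
            labels[i] = 0      # IoU < 0.3 for all ground-truth boxes
        elif best > pos_thresh:
            labels[i] = 1      # IoU > 0.7 with some ground-truth box
    # The highest-IoU anchor(s) for each ground-truth box are also positive,
    # even when that IoU is below pos_thresh.
    for j in range(len(gt_boxes)):
        best = max((ious[i][j] for i in range(len(anchors))), default=0.0)
        if best > 0:
            for i in range(len(anchors)):
                if ious[i][j] == best:
                    labels[i] = 1
    return labels


gt_boxes = [(0, 0, 100, 100), (400, 400, 500, 500)]
anchors = [
    (0, 0, 100, 100),      # IoU 1.0 with the first box
    (0, 0, 100, 50),       # IoU 0.5: neither positive nor negative
    (200, 200, 300, 300),  # no overlap with any box
    (400, 400, 500, 460),  # IoU 0.6, but the best match for the second box
]
labels = label_anchors(anchors, gt_boxes)
```

The last anchor shows why the highest-IoU rule exists: without it, the second ground-truth box would have no positive anchor at all.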

Loss Function

\[L(\{p_i\},\{t_i\})=\frac{1}{N_{\text{cls}}}\sum_i L_{\text{cls}}(p_i,p_i^*)+\lambda\frac{1}{N_{\text{reg}}}\sum_i p_i^* L_{\text{reg}}(t_i,t_i^*)\]

\[\begin{aligned}
p_i &= \text{predicted probability that anchor } i \text{ is an object}\\
p_i^* &= \text{ground-truth label, 1 if anchor positive, 0 if negative}\\
t_i &= \text{predicted bounding box (4 parameterized coordinates)}\\
t_i^* &= \text{ground-truth box associated with positive anchor}\\
L_{\text{cls}} &= \text{log loss over 2 classes}\\
L_{\text{reg}} &= \text{regression loss with smooth } L_1\\
\lambda &= \text{balancing parameter}
\end{aligned}\]
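
A plain-Python sketch of this loss may help when reading the formula. It treats the two-class log loss as binary cross-entropy on a single object probability, which is an equivalent simplification chosen for this example. The name `rpn_loss`, the `eps` clamp, and the toy inputs are assumptions; the default `lam=10.0` follows the value reported in the paper.

```python
import math


def smooth_l1(x):
    """Smooth L1: quadratic near zero, linear elsewhere."""
    ax = abs(x)
    return 0.5 * ax * ax if ax < 1.0 else ax - 0.5


def rpn_loss(p, p_star, t, t_star, n_cls, n_reg, lam=10.0, eps=1e-12):
    """p: object probabilities, p_star: 0/1 labels,
    t / t_star: predicted / target (t_x, t_y, t_w, t_h) per anchor."""
    cls_sum = 0.0
    reg_sum = 0.0
    for p_i, ps_i, t_i, ts_i in zip(p, p_star, t, t_star):
        p_i = min(max(p_i, eps), 1.0 - eps)  # avoid log(0)
        cls_sum += -(ps_i * math.log(p_i) + (1 - ps_i) * math.log(1.0 - p_i))
        # p_i^* gates the regression term: only positive anchors contribute.
        reg_sum += ps_i * sum(smooth_l1(a - b) for a, b in zip(t_i, ts_i))
    return cls_sum / n_cls + lam * reg_sum / n_reg


# Toy example: one positive and one negative anchor.
p = [0.5, 0.5]
p_star = [1, 0]
t = [(0.0, 0.0, 0.0, 0.0), (5.0, 5.0, 5.0, 5.0)]
t_star = [(0.5, 0.0, 0.0, 2.0), (0.0, 0.0, 0.0, 0.0)]
loss = rpn_loss(p, p_star, t, t_star, n_cls=2, n_reg=1, lam=1.0)
```

The negative anchor's box prediction is far from its target, yet it adds nothing to the regression term because its label is 0.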

Parameterization of the 4 coordinates for bounding-box regression

\[\begin{aligned}
t_x&=(x-x_a)/w_a, & t_y&=(y-y_a)/h_a\\
t_w&=\log(w/w_a), & t_h&=\log(h/h_a)\\
t_x^*&=(x^*-x_a)/w_a, & t_y^*&=(y^*-y_a)/h_a\\
t_w^*&=\log(w^*/w_a), & t_h^*&=\log(h^*/h_a)
\end{aligned}\]

where \(x\), \(x_a\), and \(x^*\) refer to the predicted box, the anchor box, and the ground-truth box, respectively (likewise for \(y\), \(w\), \(h\)).
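
The parameterization and its inverse can be written directly from these formulas. In this sketch boxes are `(x_center, y_center, w, h)` tuples; the function names `encode` and `decode` and the example numbers are illustrative assumptions.

```python
import math


def encode(box, anchor):
    """Map a box to (t_x, t_y, t_w, t_h) relative to an anchor.

    Both inputs are (x_center, y_center, w, h).
    """
    x, y, w, h = box
    xa, ya, wa, ha = anchor
    return ((x - xa) / wa, (y - ya) / ha, math.log(w / wa), math.log(h / ha))


def decode(t, anchor):
    """Invert encode(): recover (x_center, y_center, w, h) from offsets."""
    tx, ty, tw, th = t
    xa, ya, wa, ha = anchor
    return (xa + tx * wa, ya + ty * ha, wa * math.exp(tw), ha * math.exp(th))


anchor = (100.0, 100.0, 50.0, 50.0)
gt_box = (110.0, 95.0, 100.0, 25.0)
t_star = encode(gt_box, anchor)    # regression target for this anchor
recovered = decode(t_star, anchor)
```

Dividing by the anchor size and taking logs of the width and height ratios keeps the targets on a similar scale regardless of how large the anchor is.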

Training

Negative anchors dominate, so training on all anchors would bias the loss toward negative samples

→ Randomly sample 256 anchors per image to compute the mini-batch loss, keeping the ratio of sampled positive to negative anchors at up to 1:1
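
A minimal sketch of this sampling step, assuming labels of `1` (positive), `0` (negative), and `-1` (ignored) per anchor. Padding with extra negatives when there are fewer than 128 positives follows the paper; the function name `sample_minibatch` and the `rng` parameter are assumptions for this example.

```python
import random


def sample_minibatch(labels, batch_size=256, rng=None):
    """Pick anchor indices for one mini-batch with up to a 1:1 pos/neg ratio."""
    rng = rng or random.Random()
    pos = [i for i, label in enumerate(labels) if label == 1]
    neg = [i for i, label in enumerate(labels) if label == 0]
    n_pos = min(len(pos), batch_size // 2)     # at most half positives
    n_neg = min(len(neg), batch_size - n_pos)  # pad the rest with negatives
    return rng.sample(pos, n_pos), rng.sample(neg, n_neg)


# Toy image: 10 positive, 1000 negative, and 50 ignored anchors.
labels = [1] * 10 + [0] * 1000 + [-1] * 50
pos_idx, neg_idx = sample_minibatch(labels, rng=random.Random(0))
```

With only 10 positives available, the remaining 246 slots are filled with negatives, so the 1:1 ratio is an upper bound rather than a guarantee.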

2-2. Sharing Features for RPN and Fast R-CNN

The derivative of the RoI pooling layer w.r.t. the box coordinates is hard to compute, so 4-step alternating training is adopted instead.

4-Step Alternating Training

- Train RPN

- Train detection network (Fast R-CNN) using proposals generated by RPN

- Use the detection network to initialize RPN training, but keep the shared conv layers fixed and fine-tune only the layers unique to RPN

- Keeping the shared conv layers fixed, fine-tune the layers unique to Fast R-CNN