Summary

This paper analyzes the gated RNN and grConv (which is introduced in this paper) and shows that:

- Performance degrades

  - as the length of the sentence increases

  - as the number of unknown words increases

- grConv learns the grammatical structure of a sentence automatically (i.e., without supervision)

Neural Network for Sequences

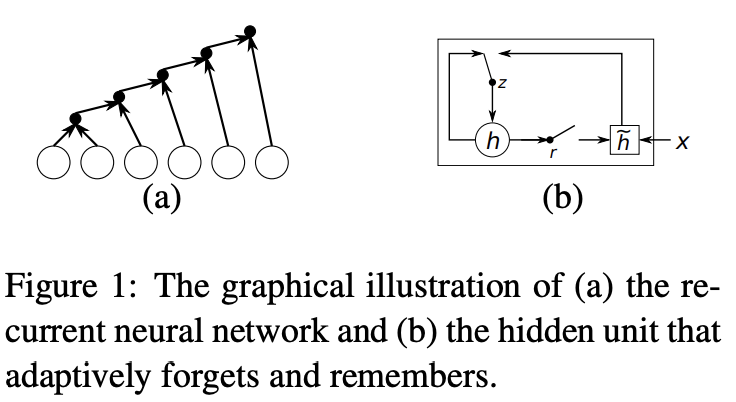

Gated RNN

Works on a variable-length sequence x by maintaining a hidden state h over time. The hidden state h at time step t is updated by

\[h^{(t)}=f(h^{(t-1)},x_t)\]

This allows the RNN to learn the distribution over the next input

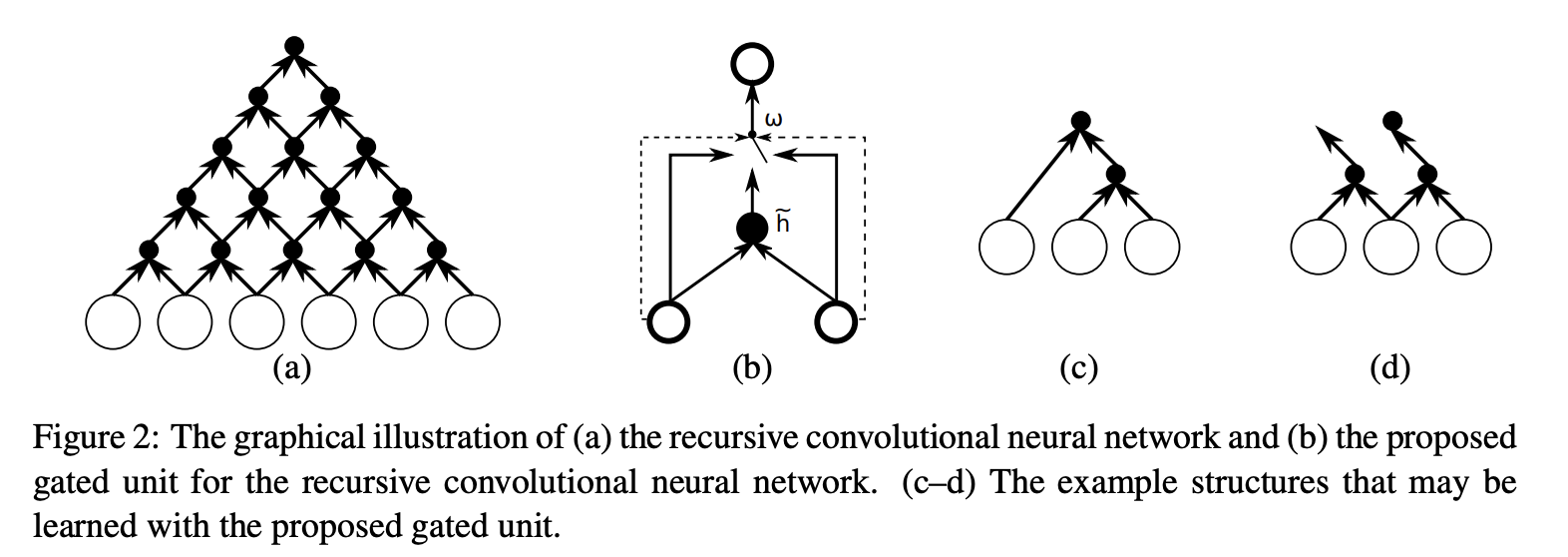

\[p(x_{t+1} \mid x_t, \dots, x_1)\]

grConv (Gated Recursive Conv Net)

Notions

- Binary ConvNet whose weights are recursively applied to the input sequence until it outputs a single fixed-length vector.

Notations

\[\text{input sequence: }x = (x_1,x_2,\dots,x_T),\ x_j \in \mathbb{R}^d\\ \text{weight matrices: } W^l, W^r, G^l, G^r\\ \text{recursion level: } t \in [1,T-1]\]

How to compute?

- The activation of the j-th hidden unit h at recursion level t is computed by

\[h_j^{(t)}=\omega_c\tilde{h}_j^{(t)}+\omega_l h_{j-1}^{(t-1)}+\omega_r h_j^{(t-1)}\\ h_j^{(0)}=Ux_j \quad (U \text{ projects the input into the hidden space})\]

- The new activation, h tilde, is computed as

\[\tilde{h}_j^{(t)} = \phi \left( W^l h_{j-1}^{(t)} + W^r h_j^{(t)} \right)\\(\phi \text{ = element-wise nonlinearity})\]

- The gating coefficients ω (which sum to 1) are computed by

\[\begin{bmatrix}\omega_c \\\omega_l \\\omega_r\end{bmatrix}= \frac{\exp \left( G^l h_{j-1}^{(t)} + G^r h_j^{(t)} \right)}{\sum_{k=1}^{3} \left[ \exp \left( G^l h_{j-1}^{(t)} + G^r h_j^{(t)} \right) \right]_k}\\ (G^l, G^r \in \mathbb{R}^{3 \times d})\]
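The computation above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation: φ is taken to be tanh, the two children of each node are read from the previous level, the weights are random, and the names `matvec`, `softmax`, `grconv_encode`, and `rand` are invented for this sketch.

```python
import math
import random

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(z):
    """Numerically stable softmax over a short list."""
    top = max(z)
    e = [math.exp(x - top) for x in z]
    total = sum(e)
    return [x / total for x in e]

def grconv_encode(xs, U, Wl, Wr, Gl, Gr):
    """Reduce T input vectors to one hidden vector.

    Returns the final vector and, for each level, the gate triples
    (w_c, w_l, w_r) of every node on that level.
    """
    # Level 0: project each input into the hidden space, h_j^(0) = U x_j.
    level = [matvec(U, x) for x in xs]
    gates = []
    while len(level) > 1:
        nxt, level_gates = [], []
        for left, right in zip(level, level[1:]):
            # Candidate activation from the two children (phi assumed tanh).
            cand = [math.tanh(a + b)
                    for a, b in zip(matvec(Wl, left), matvec(Wr, right))]
            # Three gating coefficients, normalised by a softmax.
            wc, wl, wr = softmax([a + b for a, b in
                                  zip(matvec(Gl, left), matvec(Gr, right))])
            # Gated mix of the candidate, the left child and the right child.
            nxt.append([wc * c + wl * l + wr * r
                        for c, l, r in zip(cand, left, right)])
            level_gates.append((wc, wl, wr))
        gates.append(level_gates)
        level = nxt
    return level[0], gates

# Toy usage with random weights (illustration only).
random.seed(0)
d_in, d, T = 4, 3, 5

def rand(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

xs = rand(T, d_in)
U, Wl, Wr = rand(d, d_in), rand(d, d), rand(d, d)
Gl, Gr = rand(3, d), rand(3, d)
vec, gates = grconv_encode(xs, U, Wl, Wr, Gl, Gr)
print(len(vec), [len(g) for g in gates])  # 3 [4, 3, 2, 1]
```

A sequence of length T passes through T-1 levels, each one node shorter than the previous, which matches the recursion level range t ∈ [1, T-1].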

Intuitions

- The activation of a single node is a soft choice among (new activation, activation from left, activation from right).

- Because of this, grConv may learn to parse the input sequence without supervision
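One hypothetical way to inspect these choices is to label every node with whichever of its three gating coefficients is largest. The sketch below assumes gate triples in the order (ω_c, ω_l, ω_r); the function name `dominant_choices` and the gate values are made up for illustration and do not come from the paper.

```python
def dominant_choices(level_gates):
    """Label each node by the largest of its (w_c, w_l, w_r) coefficients."""
    labels = ("new", "left", "right")
    return [labels[max(range(3), key=lambda k: triple[k])]
            for triple in level_gates]

# Made-up gate values for one level with three nodes (illustration only).
example_level = [(0.7, 0.2, 0.1), (0.1, 0.8, 0.1), (0.2, 0.3, 0.5)]
print(dominant_choices(example_level))  # ['new', 'left', 'right']
```

A node labeled "new" merges its two children, while "left" or "right" mostly copies one child upward, so the pattern of labels across levels gives a rough picture of the structure the network has chosen.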

Experiment & Results

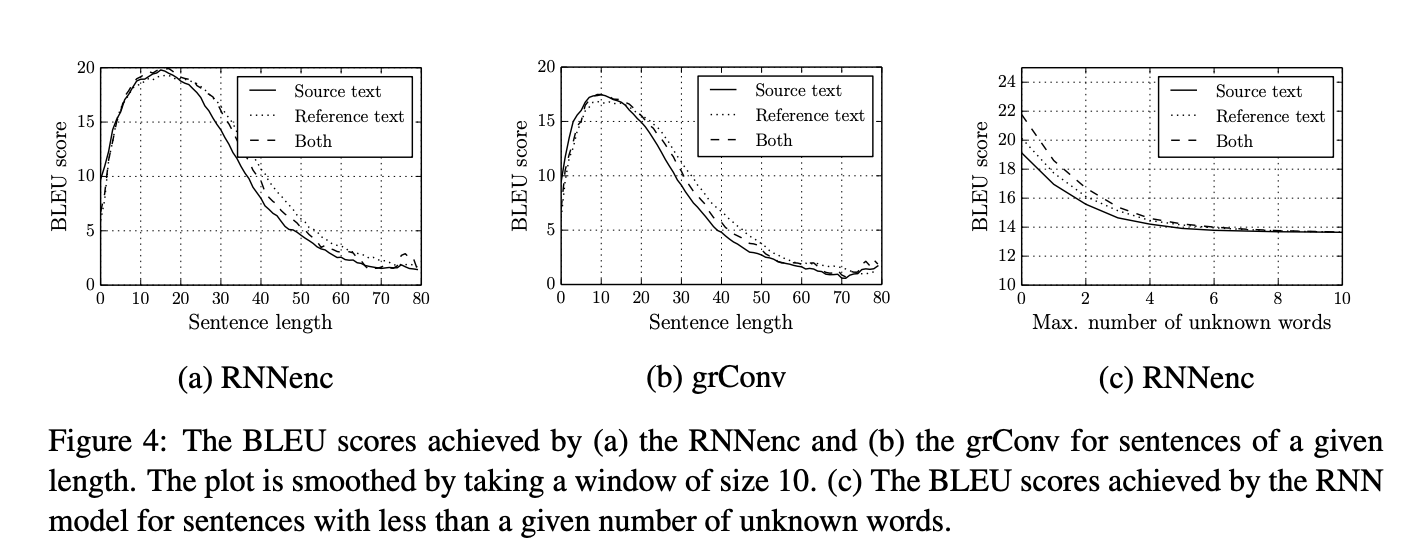

Encoder-decoder models are evaluated on the task of English-to-French translation. Two models were trained: an RNN encoder (RNNenc) and grConv. Both use a gated RNN decoder.

BLEU Score Results

The BLEU scores of both models drop significantly as the length of the sentences or the number of unknown words increases.

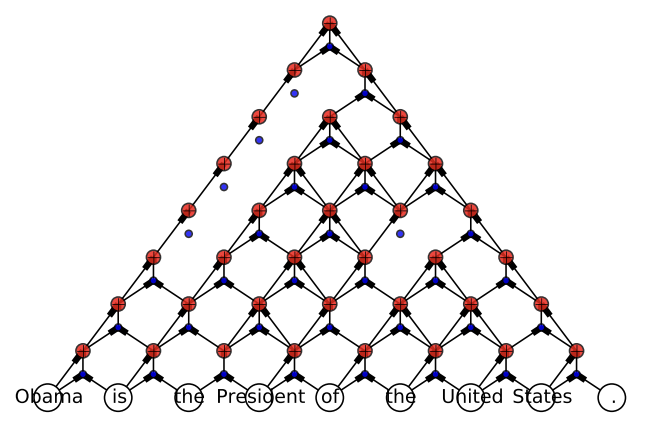

grConv’s parsing structure

The sample sentence “Obama is the President of the United States.” is parsed by grConv.

grConv extracts the vector representation of the sentence by first merging “of the United States” together with “is the President of”, and finally combining this with “Obama is” and “.”, which matches our intuition about the structure of the sentence.

Future Research Direction

- Toward larger vocabularies → requires research into reducing computation and memory costs

- Prevent performance from degrading on long sentences

- New decoder architectures (because both encoders in this paper suffer on long sentences, the decoder may be the cause)